Building a Working VQA Model in Python

A Visual Question Answering (VQA) model is a system that answers questions about an image by combining natural language processing and computer vision.

All my code for this project

I will soon start an internship at Gentian.io involving geolocation, geospatial data, and building and tuning LLMs, so I wanted to create a functional template for a VQA image-analysis model that I can strengthen over time.

I began with TensorFlow and built a very straightforward model that takes a question and a few potential answers. To start off, I used:

Question: “What color is the ball?”

Answers: “Red”, “Blue”, “White”, “Yellow”, “Purple”, etc.

I then loaded images into the model, as seen below.

First Image:

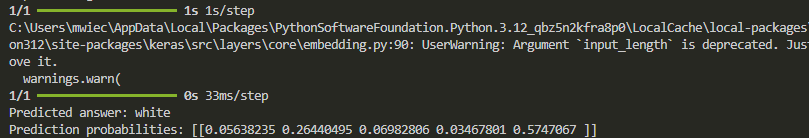

Result:

Second Image:

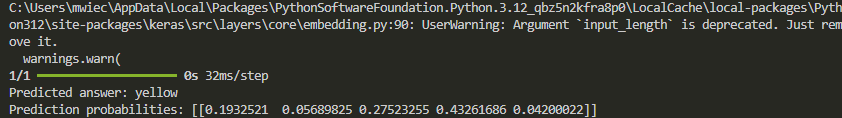

Result:

Third Image:

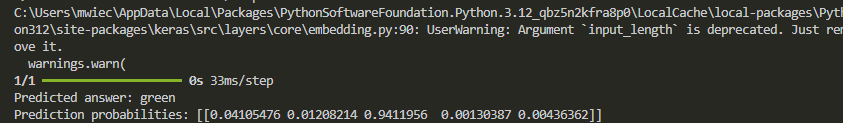

Result:

This model was fairly accurate: it answered 8 of 10 tests correctly, and in the 2 tests it missed, the correct answer still received a high probability even though it was not chosen.
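To make "a high probability for the unchosen answer" concrete, here is a minimal sketch of how raw answer scores can be turned into probabilities and ranked. It is not the project's actual code: the `softmax` and `rank_answers` helpers are hypothetical, and the scores are made-up numbers for illustration only.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    # Subtract the largest score first for numerical stability.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def rank_answers(answers, scores):
    """Return (answer, probability) pairs, most likely first."""
    probs = softmax(scores)
    return sorted(zip(answers, probs), key=lambda pair: pair[1], reverse=True)

# Hypothetical raw scores for one image (illustrative values, not real results).
answers = ["Red", "Blue", "White", "Yellow", "Purple"]
scores = [2.1, 0.3, 1.9, -0.5, 0.0]

ranking = rank_answers(answers, scores)

# Print the chosen answer and the runner-up with their probabilities.
for answer, prob in ranking[:2]:
    print(f"{answer}: {prob:.2f}")
```

Looking at the runner-up as well as the top answer shows whether a wrong prediction was a near miss or a confident mistake.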

My next step was to expand the model to handle more parameters.

Parameters:

Why this is important: it makes it possible to define more specific instances or classes of existing entities. The process would go as follows:

- Use the given model to determine color, object, shape, etc.

- Use those values to compute a probability that something in the image is what I think it is (e.g. I think this is a red cube; do the color and shape match?)

- Add any number of specific objects relevant to the task at hand and assign them a value for each trait, allowing for fine-tuned models and specific tasks
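The steps above can be sketched as follows. This is a minimal illustration, not the project's implementation: `KNOWN_OBJECTS`, `match_score`, and `best_match` are hypothetical names, the probabilities are invented, and multiplying per-trait probabilities assumes the traits are independent.

```python
# Hypothetical task-specific objects, each described by one value per trait.
KNOWN_OBJECTS = {
    "red cube": {"color": "red", "shape": "cube"},
    "blue ball": {"color": "blue", "shape": "sphere"},
}

def match_score(predictions, traits):
    """Multiply the predicted probability of each required trait value.

    `predictions` maps a trait name to a {value: probability} dict.
    A trait value the model did not predict contributes probability 0.
    Assumption: traits are treated as independent.
    """
    score = 1.0
    for trait, value in traits.items():
        score *= predictions.get(trait, {}).get(value, 0.0)
    return score

def best_match(predictions, known_objects=KNOWN_OBJECTS):
    """Return the best-matching known object and its score."""
    scores = {
        name: match_score(predictions, traits)
        for name, traits in known_objects.items()
    }
    best = max(scores, key=scores.get)
    return best, scores[best]

# Illustrative per-trait outputs for one image (made-up numbers).
predictions = {
    "color": {"red": 0.9, "blue": 0.1},
    "shape": {"cube": 0.8, "sphere": 0.2},
}

name, score = best_match(predictions)
print(name, round(score, 2))
```

Adding a new task-specific object is then just a new entry in the dictionary, with no change to the matching logic.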

From here, I tested both object and shape, both of which worked perfectly. The color-matching logic in my code works as follows:

The Euclidean distance between two RGB colors is calculated as: \[ d = \sqrt{(r_1 - r_2)^2 + (g_1 - g_2)^2 + (b_1 - b_2)^2} \]

Where: \[ \begin{aligned} r_1, g_1, b_1 & : \text{ RGB values of the detected dominant color} \\ r_2, g_2, b_2 & : \text{ RGB values of the closest standard color} \end{aligned} \]
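As a minimal sketch of this formula in code, the snippet below finds the closest standard color to a detected dominant color. The `STANDARD_COLORS` palette, the helper names, and the sample RGB value are assumptions for illustration. How the dominant color is extracted from the image is not shown here.

```python
import math

# Assumed palette of standard colors; the RGB values are illustrative choices.
STANDARD_COLORS = {
    "Red": (255, 0, 0),
    "Blue": (0, 0, 255),
    "White": (255, 255, 255),
    "Yellow": (255, 255, 0),
    "Purple": (128, 0, 128),
}

def rgb_distance(c1, c2):
    """Euclidean distance between two (r, g, b) tuples."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(c1, c2)))

def closest_color(rgb, palette=STANDARD_COLORS):
    """Return the name of the palette color nearest to `rgb`."""
    return min(palette, key=lambda name: rgb_distance(rgb, palette[name]))

# Hypothetical dominant color detected in an image.
dominant = (200, 30, 40)
print(closest_color(dominant))
```

Plain RGB distance is simple but does not always match how people perceive color differences, so this is best treated as a starting point.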

Technologies Used

- Python

- OpenCV

- PyTorch

- scikit-learn

Sources

https://visualqa.org/

https://huggingface.co/tasks/visual-question-answering